Introduction

Today, I want to address a point I’ve repeated often during my interviews. You’ve heard me say that “AI is not intelligent” a number of times now. I’m not alone.

, and are all focused on dispelling this lie. One could be forgiven for not fully recognizing the misinformation and the fear agenda behind it. Don’t buy into the lie! Recently, three significant studies have run tests that show otherwise. I posted about the Apple white paper a month ago; it was shockingly blunt. Is it possible that Apple is disparaging AI as an excuse for why it has been so difficult to deliver the promised “Apple Intelligence” features and catch up to competitors? Is anyone else thinking that the term “Apple Intelligence” just took a punch in the face and a bloody nose? I am.

To unravel this complex and developing counter-narrative, let’s begin with a simple premise: you ask ChatGPT to help you plan a trip to Paris, and within seconds it generates a perfect itinerary, complete with restaurant recommendations and museum timings. You watch it solve math problems, write poetry, and even debug computer code. It seems brilliant, right?

But what if I told you that some of the world's leading AI researchers from Apple, MIT, Harvard, and Oxford are now saying these systems might be faking it—that they're not actually thinking at all?

This isn't about AI being useless; it's incredibly competent! It's about something more fundamental: the difference between appearing intelligent and actually being intelligent. Think of it like the difference between a parrot that can repeat "2 + 2 = 4" and a child who understands why two apples plus two apples make four apples. Both can give you the right answer, but only one truly understands what's happening.

Recent groundbreaking research suggests that our most advanced AI systems, the ones making headlines and sending stock prices soaring, might be more like that parrot than we'd like to admit. [PAY ATTENTION] They're becoming incredibly competent at mimicking intelligence, but they're not actually thinking in any meaningful way. Let's dive into what these researchers discovered and why it matters for the future of AI.

The Apple Bombshell: When AI Can't Follow Simple Instructions

In 2025, Apple researchers dropped what one AI expert called "pretty devastating" findings about large language models (LLMs). They wanted to test whether AI systems that claim to show their "reasoning" are actually reasoning or just pretending to.

Their experiment was elegantly simple. They gave AI models classic computer science puzzles like the Tower of Hanoi—you know, those games where you move disks between pegs following specific rules. These puzzles are perfect for testing logical thinking because you can make them harder in predictable ways (just add more disks) while keeping the same basic rules.

What they found was shocking. When puzzles were easy (say, 4-5 disks), regular AI models actually performed better than "reasoning" models. For medium difficulty (7-8 disks), the reasoning models started to shine. But here's the kicker: once puzzles got truly challenging (10+ disks), every single AI model—including the most advanced ones from OpenAI and Anthropic—completely collapsed. Their accuracy dropped to zero.

Even stranger? The researchers noticed that as puzzles got harder, the AI models actually started "thinking" less, not more. Wow! It's like a student who gives up on a hard math problem and just writes random numbers. Even when given the complete solution algorithm (literally step-by-step instructions), the models still failed. They couldn't even follow the recipe correctly! Think about that. They were handed the algorithm, and the models still couldn’t use it to produce the deterministic answer!

Determinism matters because it means “repeatable,” and therefore “reliable,” especially for math problems. 2 + 2 always = 4. Always! Yet even with some of today’s most advanced models, we often see answers that should be repeatable come out differently from one run to the next. That’s not good at all.
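To see why each added disk makes the puzzle so much harder, and why a correct algorithm always gives the same answer, here is a minimal Python sketch of the textbook recursive Tower of Hanoi solution. It is an illustration only, not the exact algorithm text the Apple researchers placed in their prompts. The optimal solution takes 2ⁿ − 1 moves, so 10 disks already require 1,023 perfectly ordered moves.

```python
def hanoi(n, source="A", target="C", spare="B"):
    """Return the list of (from_peg, to_peg) moves that solves n-disk Hanoi.

    Textbook recursion: park the top n-1 disks on the spare peg, move the
    largest disk to the target, then stack the n-1 disks back on top of it.
    """
    if n == 0:
        return []
    return (
        hanoi(n - 1, source, spare, target)
        + [(source, target)]
        + hanoi(n - 1, spare, target, source)
    )


for disks in (4, 5, 8, 10):
    # The move count doubles (plus one) with every extra disk: 2**n - 1.
    print(disks, "disks ->", len(hanoi(disks)), "moves")
```

Run it as many times as you like and the move list never changes. That is what a deterministic, reliable procedure looks like.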

The Apple team tested multiple puzzle types and found the same pattern everywhere. These AIs weren't actually reasoning through problems; they were recognizing patterns from their training data. Once the problems exceeded what they'd seen before, the illusion shattered completely.

MIT and Harvard's Physics Test: The Kepler vs. Newton Problem

While Apple was testing puzzles, researchers at MIT and Harvard designed an even more profound experiment. They wanted to know: Do AI models actually understand the world, or are they just really good at pattern matching? This is an incredibly smart question and research focus! In a sense, it echoes Apple’s findings above.

They used a brilliant analogy from physics. Johannes Kepler could predict where planets would be in the sky with amazing accuracy, but he didn't understand why they moved that way. It took Isaac Newton to discover the underlying laws of gravity that explained planetary motion. The question is: Are our AI systems more like Kepler (great at prediction without understanding) or Newton (truly grasping the fundamental principles)?
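For readers who want to see the Kepler-versus-Newton distinction in symbols: Kepler’s third law is an observed pattern, T² ∝ r³ (orbital period squared is proportional to orbit size cubed). Newton’s law of gravity explains where that pattern comes from. Here is a short derivation, assuming a circular orbit of radius r and a planet much lighter than the Sun, with M the Sun’s mass and m the planet’s (this is F = GM₁M₂/r² with M₁ = M and M₂ = m), set equal to the force needed to keep the planet moving in a circle:

$$
\frac{G M m}{r^{2}} = \frac{m v^{2}}{r}, \qquad v = \frac{2\pi r}{T}
\;\;\Longrightarrow\;\; \frac{G M}{r^{2}} = \frac{4\pi^{2} r}{T^{2}}
\;\;\Longrightarrow\;\; T^{2} = \frac{4\pi^{2}}{G M}\, r^{3}
$$

Kepler had the pattern as an empirical rule; Newton showed it falls out of one underlying law. That is the kind of understanding the researchers were probing for.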

The researchers trained an AI model to predict planetary orbits—basically, "where will Mars be tomorrow?" The AI got incredibly good at this, achieving over 99% accuracy. Seems intelligent, right?

But then came the real test. They asked the AI to predict the gravitational forces acting on the planets. If the AI had truly learned Newton's laws from observing planetary motion, this should be easy. After all, force and motion are connected by fundamental physical laws.

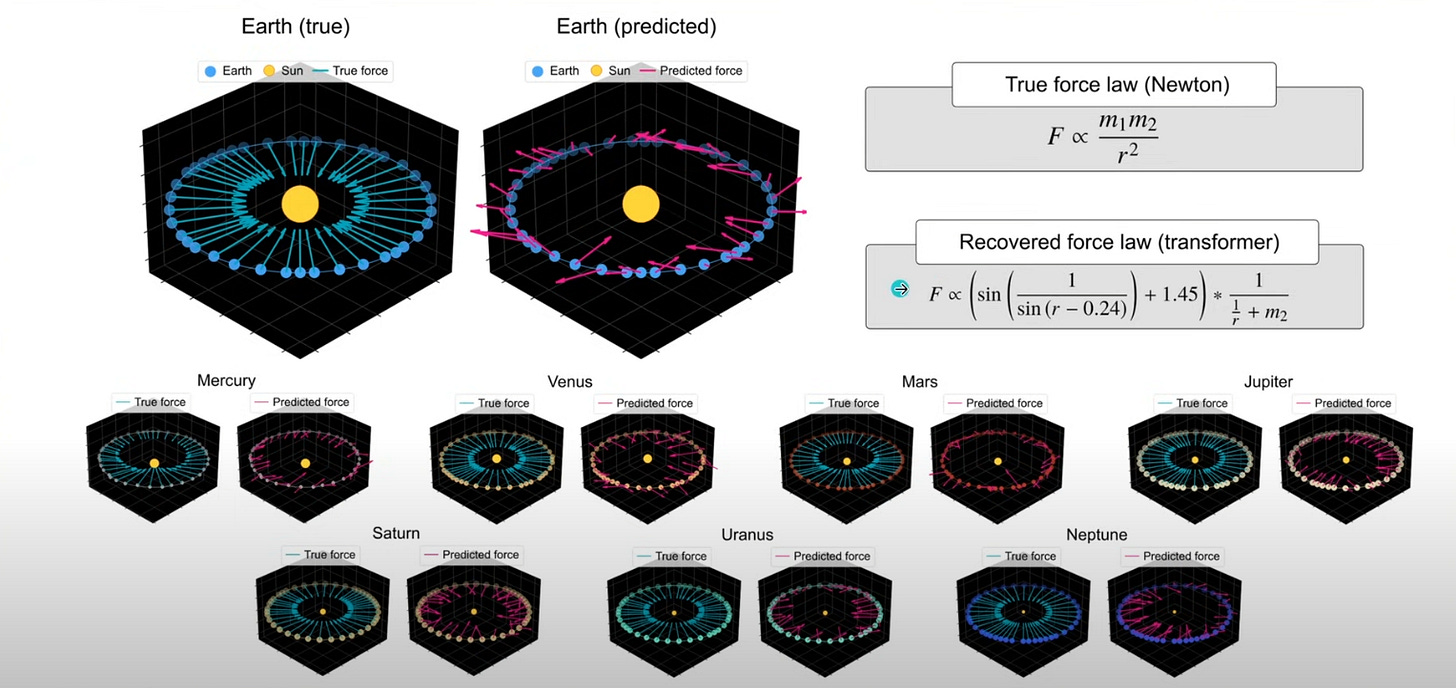

The results were devastating. The AI's force predictions were complete nonsense—not just wrong, but physically impossible. Using advanced techniques, the researchers extracted the "laws of physics" the AI had apparently learned. Instead of Newton's elegant F=GM₁M₂/r², they found bizarre, nonsensical formulas that changed for every solar system.

In the image above, compare Earth (true) with Earth (predicted). Do you see the difference? The red arrows in the predicted panel point in seemingly random directions instead of pulling consistently in the same direction, as they do in the true panel. Also, take a look at the formulas. The True Force Law (Newton’s) is elegant! The Recovered Force Law (the transformer’s), by contrast, twists itself into knots, a hallucination of its own that does nothing more than produce an approximate best fit. This is a huge problem!
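What “elegant” means here can be made concrete. The sketch below is a minimal Python illustration, not the researchers’ code: it applies Newton’s F = GM₁M₂/r² to Earth at four points on a simplified circular orbit. The constants are standard rounded values, and the circular orbit is a simplifying assumption. One formula gives the same magnitude (about 3.54 × 10²² N) at every point, and every force vector points back toward the Sun.

```python
import math

G = 6.674e-11       # gravitational constant, m^3 kg^-1 s^-2 (rounded)
M_SUN = 1.989e30    # kg (rounded)
M_EARTH = 5.972e24  # kg (rounded)
AU = 1.496e11       # mean Earth-Sun distance, m (rounded)


def gravity_on_planet(x, y, m_planet, m_star=M_SUN):
    """Newtonian force (Fx, Fy) on a planet at (x, y) from a star at the origin."""
    r = math.hypot(x, y)
    magnitude = G * m_star * m_planet / r**2  # F = G*M1*M2 / r^2
    # Unit vector from the planet back toward the star is (-x/r, -y/r).
    return (-magnitude * x / r, -magnitude * y / r)


# Sample Earth at four points around a circular orbit of radius 1 AU.
for angle_deg in (0, 90, 180, 270):
    a = math.radians(angle_deg)
    x, y = AU * math.cos(a), AU * math.sin(a)
    fx, fy = gravity_on_planet(x, y, M_EARTH)
    # A negative dot product with the position vector means the force points inward.
    inward = fx * x + fy * y < 0
    print(f"{angle_deg:3d} deg: |F| = {math.hypot(fx, fy):.3e} N, points at Sun: {inward}")
```

A model that had truly recovered this law would produce arrows like these for any planet in any solar system, with no per-system patching.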

Even more damning: when they tested GPT-4, Claude, and Gemini, models trained on all of Wikipedia and every physics textbook imaginable, these models also failed spectacularly. Despite "knowing" Newton's laws, they couldn't apply them. They defaulted to absurdly simple (and wrong) rules like "force only depends on one object's mass."

[Pay Attention to this] The conclusion? These AI systems are like students who memorized every practice problem but can't solve new ones. They've learned to mimic understanding without actually understanding anything.

Oxford's Reality Check: LLMs Are a "Hack"

Professor Michael Wooldridge, who leads AI research at Oxford University, doesn't mince words: "They are an engineering hack that's put together. They are not following some deep model of mind." Ouch…that hurt!

Wooldridge's critique cuts to the heart of the issue. He points out that while these systems can appear to do things like plan trips or solve problems, they're really just doing sophisticated pattern matching. His favorite test? Take any problem an AI seems to solve well, then describe it using words the AI has never seen before. Same problem, different vocabulary. The AI fails.
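Wooldridge’s vocabulary test can be sketched in a few lines. The Python below is a hypothetical illustration of how one might build such a probe, not his actual procedure: it swaps the familiar words in a puzzle for made-up ones (the nonsense words are arbitrary choices) while leaving the problem’s logic untouched. A system that truly understood the rules should solve both versions equally well.

```python
import re


def rename_vocabulary(problem: str, renames: dict[str, str]) -> str:
    """Swap familiar words for unfamiliar ones, leaving the problem's logic intact.

    Matching is whole-word and case-sensitive, and all swaps happen in a single
    pass, so a replacement word is never itself replaced again.
    """
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, renames)) + r")\b")
    return pattern.sub(lambda match: renames[match.group(0)], problem)


original = (
    "There are three pegs and four disks. Move all the disks from the first peg "
    "to the last peg. Never place a larger disk on a smaller disk."
)
# Hypothetical nonsense words standing in for the familiar vocabulary.
renames = {"pegs": "vorns", "peg": "vorn", "disks": "glims", "disk": "glim",
           "larger": "blicker", "smaller": "dwimmer"}

print(rename_vocabulary(original, renames))
```

Same puzzle, same rules, different words. If accuracy drops on the renamed version, the system was leaning on familiar phrasing rather than the logic.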

Why? Because it's not actually understanding the problem—it's matching patterns from the millions of trip itineraries, math solutions, and how-to guides in its training data. When you remove those familiar patterns by using unfamiliar words, the illusion collapses.

"That doesn't mean it's not useful," Wooldridge emphasizes. These tools are incredibly helpful for many tasks. But there's a crucial difference between a system that truly understands travel logistics and one that's really good at copying travel guides it's seen before. Bingo!

The Research Has Exposed the AI’s Achilles’ Heel

Looking at this research, I find the evidence compelling and the methodology sound. These aren't AI skeptics with an axe to grind; they're serious researchers from top institutions using rigorous experimental methods.

The Apple study's approach is particularly clever. By using puzzles with known solutions and controllable difficulty, they created a perfect test environment. The fact that models from several different companies all failed at the same point suggests this isn't just a quirk of one system; it's a fundamental limitation.

The MIT/Harvard physics experiment is even more convincing. Physics is the ultimate test of understanding because you can't fake it: either your predictions match reality or they don't. The fact that AIs trained on physics textbooks still couldn't apply basic laws they "knew" reveals something profound about how these systems work.

What makes these findings especially credible is that they align with what many AI researchers have suspected but couldn't prove: that pattern matching, no matter how sophisticated, isn't the same as understanding. The research provides concrete, measurable evidence for what was previously more of a philosophical debate.

However, it's worth noting some limitations. The tests focus on specific types of reasoning: logical puzzles and physics. Human intelligence encompasses much more, including creativity, emotional understanding, and social reasoning. These studies don't address whether AI might achieve other forms of intelligence through current methods. In my opinion, though, it won’t. God created us with very high capacities, and I just can’t see how a machine could come close to the fullness of the human brain!

Still, if we can't trust AI to follow simple logical rules or apply basic physics—things much simpler than human-level reasoning—then claims about achieving artificial general intelligence (AGI) through current methods seem wildly optimistic.

The Path Forward: Beyond Transformers and Pattern Matching

So where do we go from here? If current AI architectures are fundamentally limited to sophisticated pattern matching, what would it take to create systems that truly think? The research suggests we need a paradigm shift. Transformers, the architecture behind ChatGPT and similar models, are trained for next-word prediction. Asking them to achieve true reasoning might be like asking a calculator to appreciate poetry. It's simply not what they're built for.

Several possibilities emerge:

Hybrid Systems: Combine pattern-matching AI with explicit reasoning modules. Instead of hoping reasoning emerges from pattern matching, build it in directly.

New Architectures: Develop fundamentally different approaches that incorporate causal reasoning, symbolic logic, or world models from the ground up. Some researchers are exploring "neurosymbolic" AI that merges neural networks with symbolic reasoning. This is not the same as Organoid Intelligence, where clumps of human brain cells in a Petri dish are hooked up to a computer. You know, the experiment where they learned to play the classic video game Pong?

Embodied Intelligence: Perhaps true understanding requires interaction with the physical world. A system that can only process text might never truly understand concepts like force, movement, or causation. What this implies is frightening: putting AI into physical space so it can explore our world for its own goals. Not good!

Smaller, Specialized Models: Instead of building ever-larger models in hopes of emergent intelligence, focus on smaller systems that deeply understand specific domains. I’m sure this will happen, likely as part of what is known as a Mixture of Experts (MoE) design.
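The hybrid-systems idea above can be sketched concretely. Assume, hypothetically, that a language model proposes a Tower of Hanoi move list and a small hand-written rule checker decides whether to trust it. The checker below does no pattern matching at all; it simply applies the puzzle’s rules move by move. The function name and the “proposed” answer are illustrative, not any real product’s design.

```python
def check_hanoi_solution(n, moves, pegs=("A", "B", "C")):
    """Return True only if `moves` legally transfers n disks from the first peg to the last.

    This is the "explicit reasoning" half of a hybrid system: it never guesses,
    it enforces the puzzle's rules exactly, one move at a time.
    """
    # Each peg is a stack; disk n (the largest) starts at the bottom of the first peg.
    state = {p: [] for p in pegs}
    state[pegs[0]] = list(range(n, 0, -1))
    for src, dst in moves:
        if src not in state or dst not in state or not state[src]:
            return False  # unknown peg, or moving from an empty peg
        disk = state[src][-1]
        if state[dst] and state[dst][-1] < disk:
            return False  # a larger disk may never sit on a smaller one
        state[dst].append(state[src].pop())
    return state[pegs[-1]] == list(range(n, 0, -1))


# A hypothetical model's answer for 3 disks, checked as-is and with its last step dropped.
proposed = [("A", "C"), ("A", "B"), ("C", "B"), ("A", "C"),
            ("B", "A"), ("B", "C"), ("A", "C")]
print(check_hanoi_solution(3, proposed))       # True
print(check_hanoi_solution(3, proposed[:-1]))  # False
```

The design choice is the point: the pattern-matcher is free to guess, but nothing is accepted unless an exact, rule-following component confirms it.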

The MIT/Harvard team's finding that no current architecture (transformer, RNN, LSTM, or Mamba) could learn physics from observation suggests the problem isn't just about scale or training data—it's architectural. We might need fundamentally new approaches, not just bigger versions of what we have.

Conclusion

Why does it seem like AI “intelligence” is beginning to sound like a hoax? Hmm. No matter how many times someone waves a hand like Luke Skywalker and intones, “…nothing to see here!”, there is obviously something wrong with this goal of artificial general intelligence or superintelligence. Others and I don’t believe it will happen.

These three landmark studies paint a consistent picture: today's AI systems are achieving remarkable competence without true comprehension. They're like incredibly sophisticated parrots that can mimic human responses so well that we mistake mimicry for understanding.

This doesn't diminish their usefulness. A very competent parrot that can help you write emails, plan trips, and solve routine problems is still incredibly valuable. But we should be clear-eyed about what these systems can and cannot do. I’ve had mixed results with AI-assisted programming. Sometimes it’s amazing; other times it’s shockingly stupid, randomly deleting code and breaking logic. These limitations are what make source control (I use GitHub) so important.

The danger isn't that AI will become too intelligent too fast—it's that we'll mistake competence for intelligence and build critical systems on foundations of sand. Imagine medical AI that seems to diagnose diseases but is really just pattern-matching symptoms without understanding biology. Or financial systems that appear to predict markets but are just finding spurious patterns in historical data.

Understanding these limitations isn't pessimistic—it's realistic. By acknowledging what current AI can't do, we can better appreciate what it can do and chart a path toward systems that might one day achieve true understanding.

The researchers haven't killed the dream of artificial intelligence. They've shown us that the current path might be a dead end. Sometimes the most important discoveries tell us not where to go, but where not to go. As we stand at this crossroads in AI development, these findings might be exactly what we need to find the right path forward. True intelligence—the kind that understands rather than mimics—remains one of the grand challenges of our time. Or better put, it might be a huge issue for the Beast System that is still under development today.

Expect more researchers to pile onto this line of inquiry soon. It’s drawing a lot of attention from the media and others, so expect a new flood of studies along these lines. In the meantime, focus on your God-given intelligence and work as hard and faithfully as possible. We are one day closer than we first believed. It’s beginning to appear that the Tower of Babel under construction might be weaker and less complete than we thought. But make no mistake, the Revelation 13 set of controls will mercilessly pursue and persecute Tribulation Saint believers after we’re gone.

#Maranatha

YBIC,

Scott

“They’re becoming incredibly competent at mimicking intelligence, but they’re not actually thinking in any meaningful way.” That statement is key, and it’s exactly why we sound the alarm. People are increasingly turning to AI for serious advice, driven by the perception that AI “knows everything.” Just the other day, I was joking around with a kid at church about something completely trivial, and his first response was, “Wait, let me see what my phone says.”

The danger is this: perception is not reality. And I don’t think people fully grasp the ramifications. This has the potential to alter the course of history. Literally. We’re on the verge of a time when people will trust their phones (AI) over sound reasoning, research, and truth. We are really already there. It’s not far-fetched to imagine a future where Holocaust deniers are “validated” because an AI told them what they wanted to hear. That’s how powerful this deception could become.

It seems the Lord has put a temporary kibosh on AI. I don’t believe it will go further until He lifts His finger. 🙌