DISCLAIMER: The following is my opinion, based on my research and the research of others. I am not an authority on such matters. But I am concerned. Take what you see with a grain of salt and seek guidance from others more qualified than myself.

Notes from Scott

PAY ATTENTION. THIS IS A WARNING!

Following up on my last three posts on how to be smart in this season and guard against AI intrusion into our lives and privacy, let me continue to show you examples of what to look out for. This time, we will look at Adobe Systems, maker of the number one creative design software suite in the world. We will learn what exactly happened last June and the controversy it caused in Adobe’s user community. It seriously eroded user confidence, because users feared that the “artistic work” they create (often on behalf of their clients) was being ingested into Adobe’s generative AI models.

Think about that. If you feed other people’s intellectual property into your own AI without compensation, so that the AI becomes capable of reproducing the work on its own and gets better and better at it with each generation, that is essentially planned obsolescence. The artists and creatives, by using Adobe products, were making their own livelihoods obsolete. It’s unbelievable.

Summary of what happened

In June 2024, Adobe faced significant backlash from its user base following an update to its Terms of Service (ToS). The controversy erupted when users were prompted to accept new terms for their Creative Suite applications, which included language that many found concerning. The updated ToS stated that Adobe "may access your content through both automated and manual methods" and could use "techniques such as machine learning" to analyze content. This vague wording sparked widespread alarm among creators, who feared that Adobe might use their work to train AI models or access confidential projects without consent here and here.

The backlash spread rapidly across social media platforms, with users expressing concerns (more like fury) about privacy invasion, potential misuse of their content, and the implications for projects under non-disclosure agreements. Longtime users had had enough and cancelled their Adobe subscriptions.

In response to the uproar, Adobe quickly issued a statement to clarify its position. The company emphasized that it does not train generative AI models on customer content, does not assume ownership of users' work, and does not access customer content beyond legal requirements. I think this falls under the "too little, too late" category. A lot of damage was done and Adobe struggled to regain the narrative.

On June 24, 2024, Adobe released updated Terms of Service that explicitly addressed the concerns raised by users. The new terms included clear statements on content ownership, explicit prohibition on using user content for AI training (with an exception for Adobe Stock), and enhanced user rights and opt-out options. Adobe also added summary sections to each ToS section for easier understanding. Give them some credit: they did jump all over this.

This incident highlighted the growing tension between tech companies and content creators, especially concerning AI and data usage. It underscored the importance of transparency, clear communication, and user-friendly language in terms of service agreements. While Adobe's quick response and subsequent ToS update helped mitigate some of the damage, trust issues persisted among some users.

Read some of the comments on Reddit here. Warning about some language.

It all began with an update to the Adobe Terms of Service

Adobe's update to its Terms of Service sparked significant controversy and concern among users. Here are the specific concerns they raised:

Privacy and Data Access

Unauthorized content access: Users are worried that Adobe may access their private and sensitive work without consent here and here. This includes concerns about Adobe viewing confidential projects or work protected by non-disclosure agreements (NDAs) here.

AI training: There's apprehension that Adobe might use users' creative work as training data for their AI models, particularly Firefly, without proper credit or compensation here.

Vague language: The ambiguous wording in the updated terms, such as "we may access your content through both automated and manual methods," has fueled speculation about the extent of Adobe's access to user content here.

Intellectual Property and Ownership

Regarding content ownership, some users interpreted the terms as potentially allowing Adobe to assume ownership of their work, despite Adobe's assurances to the contrary here and here. It gets worse: concerns were also raised about the "worldwide royalty-free" license granted to Adobe, which some users feared could allow the company to use their work without compensation.

Legal and Professional Implications

1. NDA violations: Users working on confidential projects worry that Adobe's access to their content might cause them to violate non-disclosure agreements here and here.

2. Client trust: Some professionals expressed concern that clients might prohibit the use of Adobe software if there's a risk of their content being accessed or analyzed by Adobe here.

Consent and Control and now the FTC is involved

One of the most significant issues that came to light as users began recoiling from Adobe products is that they found it very hard to opt out (here) of AI intrusion and to cancel their subscriptions. Not only were there surprising “early termination fees” that had not been properly disclosed to users before purchase, but it got so noisy that the FTC got involved, resulting in a lawsuit here. Additionally, there was considerable resentment about forced acceptance of a revised Terms of Service (here) to continue using the platform. Here is the opening of the FTC's announcement:

The Federal Trade Commission is taking action against software maker Adobe and two of its executives, Maninder Sawhney and David Wadhwani, for deceiving consumers by hiding the early termination fee for its most popular subscription plan and making it difficult for consumers to cancel their subscriptions.

These concerns highlight the growing tension between user privacy, data ownership, and the development of AI technologies in the creative industry. Adobe's response and subsequent clarifications were quick, but a lot of damage was done to the brand. There was a user revolt in which artists were so angry that they moved over to Affinity Photo, a direct competitor that greatly capitalized on the controversy here.

How to be aware of Adobe AI Intrusion

By far the greatest concern I have is Adobe Acrobat, the software built around the read-only “portable document format” that Adobe brought to market many years ago. The format is wildly successful and is recognized by the file extension: .PDF

In the screenshot below, you can see the hooks that prompt you to “give permission” for AI features to “help you” with your document. THINK with me for a minute. If you get the AI assistant to review, summarize, alter, or augment a document, that document goes into the cloud and off your computer. That is where all the sophisticated servers are. They cannot process AI on your desktop (PC/Mac), at least for now. I don’t know what will happen in the future.

Why did I use the “Post Rapture Emergency Message” script that Gary Ray and I put together in Rapture Kit 2.1 as a sample screenshot below? Well, I’m hoping that a seed will be planted by whoever reads this!

Rapture Kits are easy to download here, and you can make as many as you want on a 32GB USB drive and distribute them freely.

PDF Document showing where the triggers are to activate AI features:

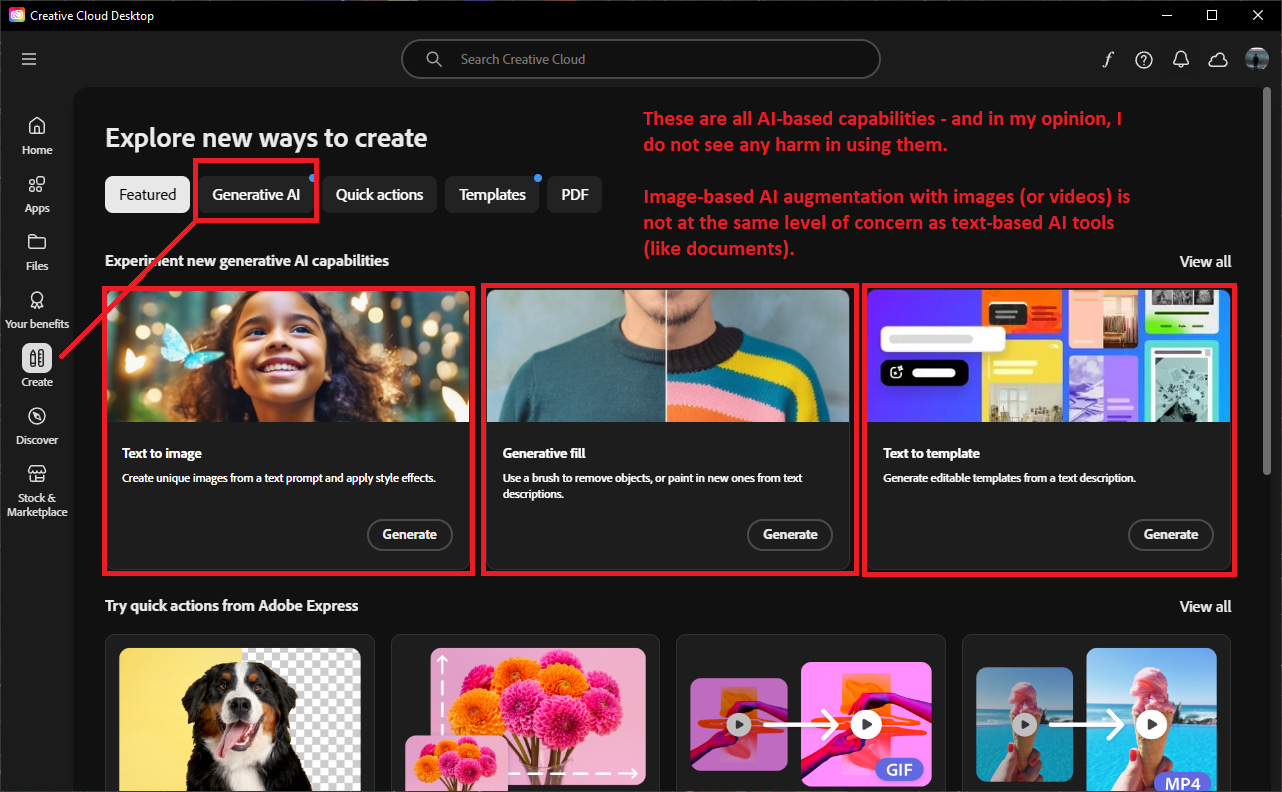

Generative AI in Adobe Products

While I am not going to recommend using AI features in sensitive PDF documents (see above), the picture is less clear for generative AI features for images. I am generally supportive of tooling that saves time in Photoshop, Premiere, InDesign, etc. I do not see the harm in using these features. You must make your own decision. Remember, we’ve already talked about work requirements where you may not have a choice. I would be peaceful about image augmentation using AI.

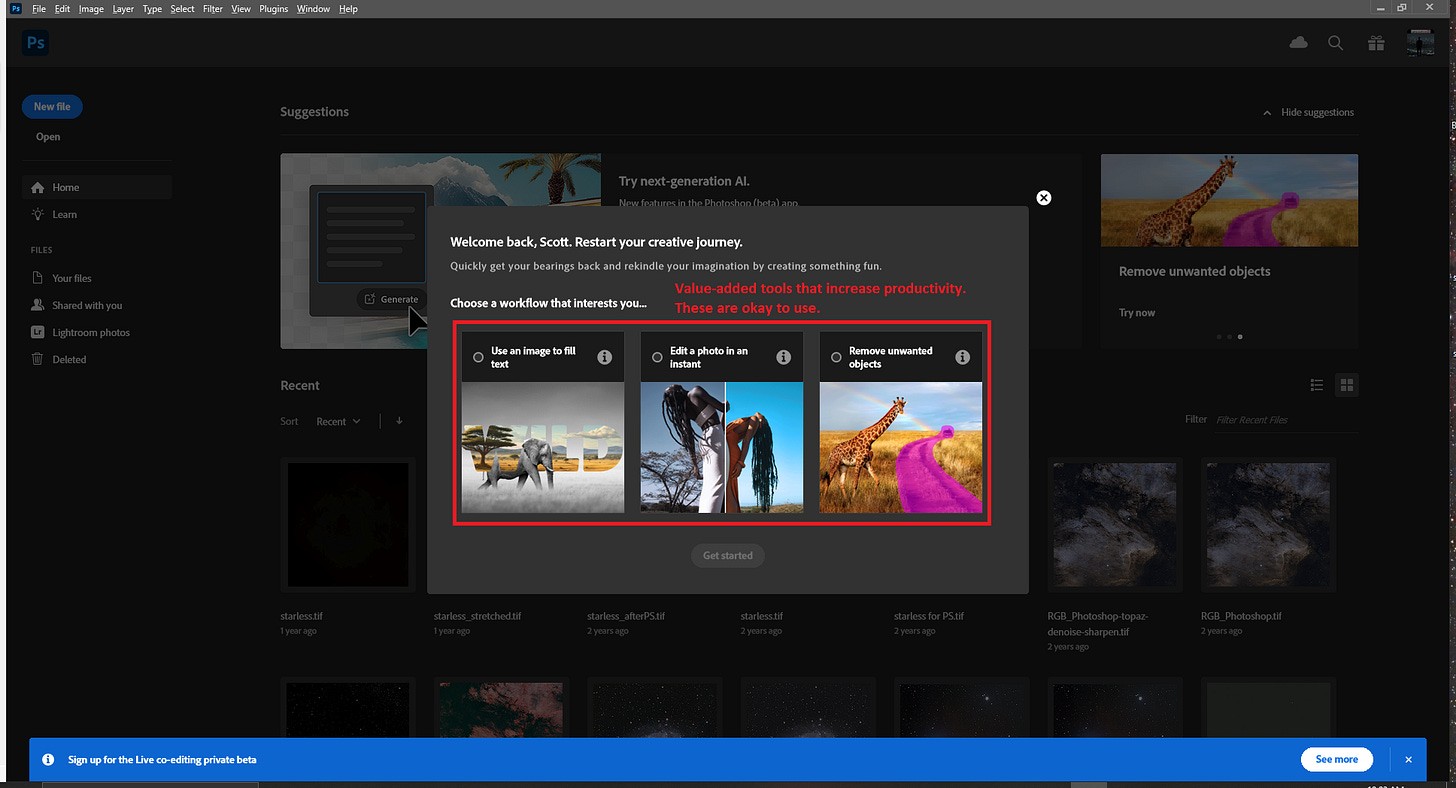

There are many nooks and crannies where they present “using AI features,” and the prompts are nearly in your face. This is the splash from Photoshop:

Here’s another example showing three hooks that all require permission to use AI and are powerful image manipulation: (a) image fill, (b) intelligent photo editing, and (c) removing unwanted distractions in photos. These are very powerful features and there is certainly a big uplift in productivity. Again, I do not see any warning signs here.

Summary

This post focuses on Adobe Creative Suite products and has two main points. The first is how aggressive companies have been to get a hold of training data. They have operated in the gray, and now the FTC has sent a warning shot to all companies that skirt user disclosure, alter their Terms of Service, and are less than transparent about what they are doing with other people’s creative assets and intellectual property. The second is where you will see AI options, how to recognize them, and what thoughts should go through your mind.

I trust that you find the Best Practices on AI series useful.

YBIC,

Scott

Scott,

Thank you for your diligence in preparing and sharing this information with us. I feel like I'm back in kindergarten again, because I know so little about these things.

Thank you, Scott. I've just been using their Web app to produce raw images which I can use in my work. That seems to be clear of this stuff. I've prayed my whole Christian life to be able to see these things play out. What a ride it's become. The evil is appalling. But the Lord still seems amused at their flailing about. I wonder if we'll even be interested in discussing it all once we're with the Lord.