These are some of the foundational mathematical formulas commonly used in AI. Math, plain and not-so-simple. What’s important here is the confirmation that math is a known “truth” that no demon can override. Why? Well, because the LORD created the structure, rules, and principles that govern our universe. The scientific community is always lagging behind the Creator! Please pray for these scientists! When they see clearly, they can become powerful influencers who bring the truth of God’s Word and the Gospel to many who don’t believe.

1. Linear Regression (Predictive Modeling)

Used in supervised machine learning to predict a numeric outcome from input variables.

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_n x_n + \epsilon$$

$y$: target variable (output)

$x_1, x_2, \dots, x_n$: feature variables (inputs)

$\beta_0$: intercept

$\beta_1, \beta_2, \dots, \beta_n$: coefficients

$\epsilon$: error term
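Here is a minimal Python sketch of that formula, just to make it concrete. The function name `predict_linear` and the house-price numbers are made up for illustration, and the error term is left out because it is the part the model cannot predict.

```python
def predict_linear(intercept, coefficients, features):
    """Return beta_0 + beta_1*x_1 + ... + beta_n*x_n (error term omitted)."""
    return intercept + sum(b * x for b, x in zip(coefficients, features))


# Hypothetical example: price (in thousands) from size and bedroom count.
# intercept = 50, coefficient for size = 100, coefficient for bedrooms = 20
price = predict_linear(50.0, [100.0, 20.0], [1.5, 3.0])
print(price)  # 260.0
```

Each coefficient says how much the prediction moves when its input goes up by one unit, with the other inputs held fixed.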

2. Logistic Regression (Binary Classification)

Estimates probabilities for classification tasks.

$$P(y=1 \mid X) = \frac{1}{1 + e^{-(\beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_n x_n)}}$$

$P(y=1 \mid X)$: Probability of class 1

$e$: Euler’s number (~2.71828)
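As a rough sketch, the same weighted sum from linear regression gets squeezed into a probability between 0 and 1. The name `predict_probability` and the coefficient values below are assumptions chosen only so the arithmetic is easy to follow.

```python
import math


def predict_probability(intercept, coefficients, features):
    """Return P(y=1 | X) for a logistic regression model."""
    # z is the same linear combination used in linear regression
    z = intercept + sum(b * x for b, x in zip(coefficients, features))
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical coefficients: z = -1 + 0.5*2 + 0.25*0 = 0, so the model is undecided
p = predict_probability(-1.0, [0.5, 0.25], [2.0, 0.0])
print(p)  # 0.5
```

A weighted sum of zero lands exactly on 50/50. Larger positive sums push the probability toward 1, and larger negative sums push it toward 0.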

3. Activation Functions (Neural Networks)

Activation functions introduce non-linearity in neural networks. A common one is the Sigmoid function:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

Another common activation function is ReLU (Rectified Linear Unit):

$$\text{ReLU}(x) = \max(0, x)$$
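Both functions are short enough to write out directly. This is only a sketch for single numbers; real neural network libraries apply them to whole arrays at once.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def relu(x):
    """Pass positive values through unchanged and replace negatives with 0."""
    return max(0.0, x)


print(sigmoid(0.0))  # 0.5
print(relu(-3.0))    # 0.0
print(relu(2.5))     # 2.5
```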

4. Gradient Descent (Optimization Algorithm)

Used for minimizing a loss function during model training:

$$\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta)$$

$\theta_j$: parameter being optimized

$\alpha$: learning rate

$J(\theta)$: cost function to minimize
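To see the update rule in action, here is a small sketch with a single parameter. The cost function $J(\theta) = (\theta - 3)^2$ is an assumption picked for illustration: its lowest point is at $\theta = 3$, and its derivative is $2(\theta - 3)$.

```python
def gradient_descent(gradient, theta, learning_rate, steps):
    """Repeatedly apply theta := theta - learning_rate * dJ/dtheta."""
    for _ in range(steps):
        theta = theta - learning_rate * gradient(theta)
    return theta


# Illustrative cost J(theta) = (theta - 3)**2, whose derivative is 2 * (theta - 3)
theta = gradient_descent(lambda t: 2.0 * (t - 3.0), theta=0.0, learning_rate=0.1, steps=100)
print(theta)  # very close to 3.0
```

With this cost and learning rate, each step removes a fifth of the remaining distance to the minimum. A learning rate that is too large can overshoot and never settle, which is why $\alpha$ is usually kept small.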

5. Mean Squared Error (Regression Loss)

A common loss function measuring the average squared difference between predicted and actual values:

$$\text{MSE} = \frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2$$

$y_i$: Actual values

$\hat{y}_i$: Predicted values

$n$: Number of observations
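A quick sketch of the calculation, using four made-up observations:

```python
def mean_squared_error(actual, predicted):
    """Average of the squared differences between actual and predicted values."""
    n = len(actual)
    return sum((y - y_hat) ** 2 for y, y_hat in zip(actual, predicted)) / n


# Differences are 0, -1, 0, 2, so the squares are 0, 1, 0, 4 and the average is 5 / 4
mse = mean_squared_error([1.0, 2.0, 3.0, 4.0], [1.0, 3.0, 3.0, 2.0])
print(mse)  # 1.25
```

Because the differences are squared, the single miss of 2 contributes four times as much as the miss of 1. Big errors get punished heavily.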

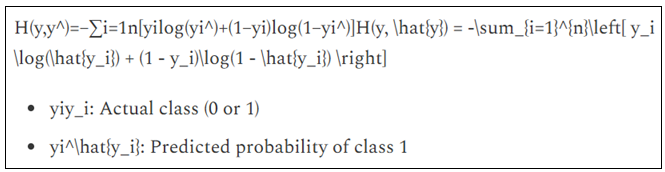

6. Cross-Entropy Loss (Classification Loss)

Measures how well a classification model’s predicted probabilities match the actual classes. The version below is for binary (two-class) problems:

$$H(y, \hat{y}) = -\sum_{i=1}^{n}\left[ y_i \log(\hat{y}_i) + (1 - y_i)\log(1 - \hat{y}_i) \right]$$

$y_i$: Actual class (0 or 1)

$\hat{y}_i$: Predicted probability of class 1
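The sketch below compares a model that is confidently right with one that is confidently wrong. The labels and probabilities are made up, and the code assumes every prediction is strictly between 0 and 1, since the logarithm of 0 is undefined.

```python
import math


def binary_cross_entropy(actual, predicted):
    """Sum of -[y*log(p) + (1 - y)*log(1 - p)] over all observations."""
    return -sum(
        y * math.log(p) + (1 - y) * math.log(1 - p)
        for y, p in zip(actual, predicted)
    )


labels = [1, 0]
good = binary_cross_entropy(labels, [0.9, 0.1])  # confident and right
bad = binary_cross_entropy(labels, [0.1, 0.9])   # confident and wrong
print(good)  # about 0.21
print(bad)   # about 4.61
```

The loss stays small when the model puts high probability on the correct class and grows quickly when it is confidently wrong.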

7. Softmax Function (Multiclass Probability Distribution)

Used to transform outputs into probabilities summing up to 1 in multiclass classification:

$$\text{Softmax}(x_i) = \frac{e^{x_i}}{\sum_{j=1}^{K} e^{x_j}}$$

$x_i$: Input value for class $i$

$K$: Total number of classes
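Here is a small sketch with three made-up class scores. One detail is not in the formula above: the code subtracts the largest score before exponentiating. That is a common numerical safeguard against overflow, and it does not change the result because the same factor cancels from the top and bottom of the fraction.

```python
import math


def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    largest = max(scores)  # stability trick; cancels out in the ratio
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])
print(probs)       # largest score gets the largest probability
print(sum(probs))  # 1.0, up to floating-point rounding
```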

8. Bayes’ Theorem (Probabilistic Modeling)

Fundamental theorem for probabilistic inference:

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$

$P(A \mid B)$: Probability of event $A$ given event $B$

$P(B \mid A)$: Probability of event $B$ given event $A$

$P(A), P(B)$: Probabilities of events $A$ and $B$ each considered on its own (the marginal probabilities); the events themselves do not need to be independent
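A classic way to get a feel for the theorem is a medical test. All the numbers below are hypothetical: 1% of people have a condition, the test catches 95% of real cases, and it gives a false alarm for 5% of healthy people.

```python
def bayes(p_b_given_a, p_a, p_b):
    """Return P(A | B) = P(B | A) * P(A) / P(B)."""
    return p_b_given_a * p_a / p_b


# Hypothetical numbers: A = has the condition, B = test comes back positive
p_condition = 0.01
p_pos_given_condition = 0.95
p_pos_given_healthy = 0.05

# P(B): overall chance of a positive test, counting both sick and healthy people
p_positive = (p_pos_given_condition * p_condition
              + p_pos_given_healthy * (1 - p_condition))

posterior = bayes(p_pos_given_condition, p_condition, p_positive)
print(posterior)  # about 0.16
```

Even with a positive result, the chance of actually having the condition is only about 16% in this made-up scenario, because the condition is rare to begin with and healthy people far outnumber sick ones.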

These equations represent some of the foundational mathematical principles behind modern AI. Bonus points to anyone who can explain these to me. I only have an MBA and I’m a little over my skis here.

YBIC,

Scott